In the world of Machine Learning (ML) and Artificial Intelligence (AI), the quality of the final model is a direct reflection of the quality of its training data. And at the heart of quality data lies data annotation. If data annotation is the process of labeling raw data to give AI a sense of “sight” or “understanding,” then the Data Annotation Rubric is the non-negotiable set of rules that governs that process. It is the single most critical document that ensures consistency, accuracy, and fidelity across millions of data points, bridging the gap between human understanding and machine logic.

More than ever, annotators are required to master rubrics, and many annotation platforms ask freelancers to learn and apply the rules quickly and precisely. This article tackles this important topic by explaining what rubrics are and why they matter, and, as usual, by offering some tips and recommendations.

Whether you’re a beginner just starting your journey as a freelance annotator or a seasoned data scientist struggling to scale your quality assurance (QA) process, mastering the rubric is the key to unlocking better models and better career opportunities.

Basic Concepts: What is a Data Annotation Rubric?

A data annotation rubric is a structured scoring system or checklist used to assess the quality of labels applied to data based on predefined, objective criteria. Think of it as the ultimate source of truth, moving beyond general project guidelines to provide granular, measurable standards for what constitutes a “correct” or “high-quality” annotation.

While Annotation Guidelines tell you how to annotate (e.g., “Use a bounding box for cars”), the Rubric tells you how well the annotation meets the project’s quality bar (e.g., “A bounding box must be snug to the object with a maximum of 3 pixels of padding”).

The Core Components of a Rubric

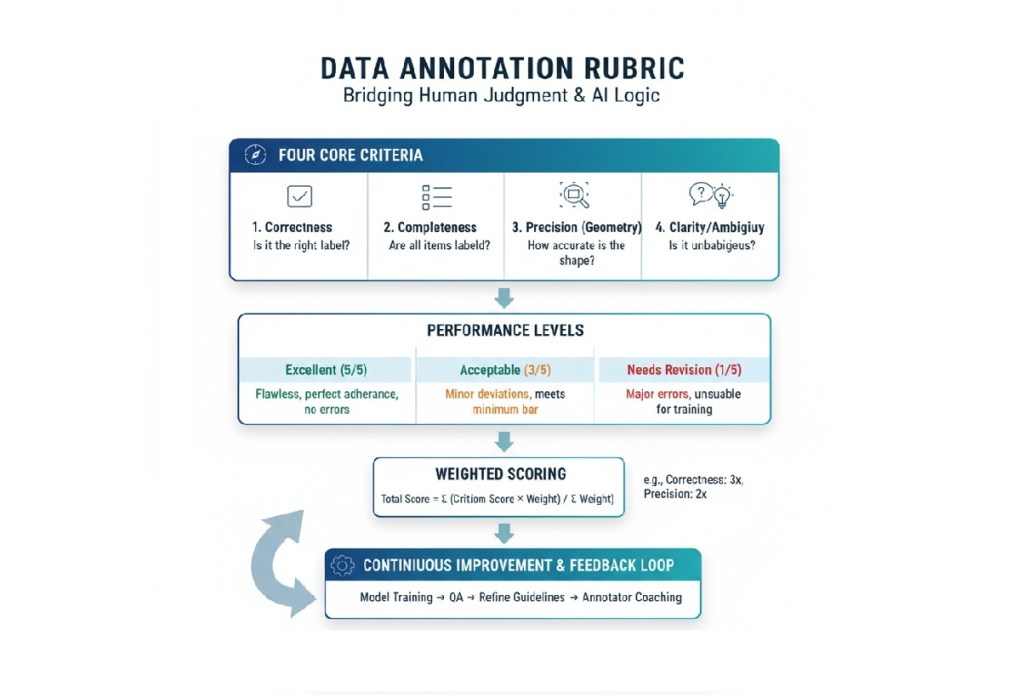

Rubrics break down the abstract concept of “quality” into quantifiable dimensions. While every project is unique, a solid rubric typically evaluates these four core criteria:

| Rubric Criterion | Question it Answers | Example for an Image Bounding Box Task |
|---|---|---|
| Correctness | Does the label/class match the object in the data? | Is the object labeled ‘Truck’ actually a truck, or is it a bus? |
| Completeness | Are all required features or entities labeled? | Are all pedestrians in the frame labeled, or was one missed? |
| Precision (Geometry) | Is the shape/location of the annotation accurate? | Is the bounding box tight around the object, or does it include too much background space? |
| Clarity/Ambiguity | Is the annotation clear and unambiguous for downstream use? | Does the annotator use the ‘Unsure’ tag correctly for blurry images, or is a clear object incorrectly flagged as ‘Unsure’? |

A good rubric will not only define these criteria but will also include performance levels (e.g., Excellent, Acceptable, Needs Revision) with detailed, descriptive text for each level, making quality assessment objective rather than subjective.

Why Rubrics are Non-Negotiable in ML/AI

In the high-stakes environment of AI development—where data errors can lead to everything from frustrating user experiences to dangerous outcomes in self-driving cars or medical diagnostics—rubrics are essential for both people and models. Here are three key points to consider.

The Bedrock of Model Accuracy

Garbage In, Garbage Out (GIGO). Your machine learning model is only as smart as the data you feed it, and data errors can substantially degrade its performance (figures of up to 30% are sometimes cited). A robust rubric ensures the data used for training is high-fidelity Ground Truth.

- Establishing Ground Truth: The rubric defines the “correct answer” the model learns from. Without a uniform definition of “correct,” the model trains on noisy, inconsistent data, leading to poor generalization.

- Reducing Bias: A detailed rubric helps spot and mitigate subtle human biases or subjective interpretations by forcing annotators to adhere to objective, measurable standards. For instance, in sentiment analysis, a rubric clarifies the line between ‘Neutral’ and ‘Slightly Positive’ with explicit examples.

Consistency Across the Workforce

Data annotation projects often involve large teams, sometimes hundreds or thousands of annotators and Quality Assurance (QA) specialists. Different people have different interpretations.

- Standardized Training: For beginners, the rubric is the primary training document. It provides a clear, single source of truth for learning the task, dramatically shortening the ramp-up time and ensuring everyone starts with the same quality standard.

- Inter-Annotator Agreement (IAA): Rubrics give QA teams a shared yardstick for measuring IAA. If two annotators label the same data point, their scores on the rubric should be close. Wide deviations signal an issue with an annotator’s understanding or, more critically, an ambiguity in the guideline itself.
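A minimal Python sketch of that check, assuming QA has already scored each annotator’s version of an item on a 1 to 5 scale per criterion. The item IDs, the scores, and the one-point tolerance (`max_gap`) are hypothetical.

```python
# Hypothetical rubric scores (1-5), keyed by item ID and then by rubric criterion.
Scores = dict[str, dict[str, int]]

def flag_disagreements(a: Scores, b: Scores, max_gap: int = 1) -> list[tuple[str, str, int]]:
    """Return (item_id, criterion, gap) wherever two score sets differ by more than max_gap."""
    flagged = []
    # Only compare items and criteria that both annotators' work was scored on.
    for item_id in sorted(a.keys() & b.keys()):
        for criterion in sorted(a[item_id].keys() & b[item_id].keys()):
            gap = abs(a[item_id][criterion] - b[item_id][criterion])
            if gap > max_gap:
                flagged.append((item_id, criterion, gap))
    return flagged

annotator_a = {
    "img_001": {"Correctness": 5, "Precision": 4},
    "img_002": {"Correctness": 5, "Precision": 2},
}
annotator_b = {
    "img_001": {"Correctness": 5, "Precision": 5},
    "img_002": {"Correctness": 2, "Precision": 2},
}
print(flag_disagreements(annotator_a, annotator_b))  # [('img_002', 'Correctness', 3)]
```

A one-point gap on `img_001` is tolerated, while the three-point Correctness gap on `img_002` is sent for review. That is exactly the kind of item that may reveal an ambiguous guideline.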

Efficiency in the Human-in-the-Loop Workflow

For project managers and data scientists, the rubric is a powerful QA tool that goes beyond simple statistical metrics (like overall accuracy).

- Qualitative Feedback Loop: While a statistical score might say “80% accuracy,” the rubric explains why the remaining 20% failed (e.g., “Precision error on polygon corners” or “Missing attribute for occlusion”). This qualitative feedback is vital for the iterative refinement of both the annotation process and the model’s performance.

- Targeted Improvement: By quantifying error types, rubrics help direct re-training efforts for annotators and highlight edge cases that need to be explicitly added to the main guidelines.
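To make the qualitative breakdown concrete, here is a small sketch that tallies failed reviews by rubric criterion and by specific error. The review log and its error descriptions are invented for illustration. In practice, they would come from your QA tool’s export.

```python
from collections import Counter

# Hypothetical QA log: each failed annotation is tagged (criterion, specific error).
failed_reviews = [
    ("Precision", "polygon corners"),
    ("Completeness", "missing occlusion attribute"),
    ("Precision", "polygon corners"),
    ("Correctness", "bus labeled as truck"),
    ("Precision", "box too loose"),
]

def error_breakdown(reviews):
    """Count failures per criterion and per specific error, most frequent first."""
    by_criterion = Counter(criterion for criterion, _ in reviews)
    by_error = Counter(reviews)
    return by_criterion.most_common(), by_error.most_common()

by_criterion, by_error = error_breakdown(failed_reviews)
total = len(failed_reviews)
for criterion, count in by_criterion:
    # First line printed: "Precision: 3/5 failures (60%)"
    print(f"{criterion}: {count}/{total} failures ({count / total:.0%})")
```

With these numbers, retraining on polygon corners would address the largest single cause of failure.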

Getting Started: The Beginner’s Guide to Rubrics

If you’re a new data annotator, the rubric can seem intimidating, but mastering it is the most direct path to becoming a high-performing, high-value asset.

Treat the Rubric as Your Bible

Never, ever start annotating a task without thoroughly reading the entire rubric and its accompanying guidelines.

- The Annotation Guidelines detail the what (the classes, the tools, the process).

- The Rubric details the how well (the definition of quality and what mistakes look like).

For example, a guideline might say “label all cars.” The rubric will clarify:

Criterion: Precision. Acceptable: Bounding box must be within 5 pixels of the object outline. Unacceptable: Box cuts into the object or extends more than 10 pixels outside.
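Rules this precise can be checked mechanically. The sketch below assumes boxes are stored as pixel coordinates `(x_min, y_min, x_max, y_max)` and that a tight reference box around the object outline is available (for instance, from a gold label). Both `Box` and `grade_box` are hypothetical names. Writing the rule as code also exposes a gap in it: padding of more than 5 but at most 10 pixels is neither Acceptable nor Unacceptable. The sketch therefore routes those cases to QA instead of guessing.

```python
from typing import NamedTuple

class Box(NamedTuple):
    """Axis-aligned box in pixel coordinates."""
    x_min: int
    y_min: int
    x_max: int
    y_max: int

def grade_box(annotated: Box, reference: Box,
              acceptable_px: int = 5, unacceptable_px: int = 10) -> str:
    """Grade an annotated box against a tight reference box around the object."""
    # Per-side margin: positive = padding outside the object, negative = cuts into it.
    margins = (
        reference.x_min - annotated.x_min,
        reference.y_min - annotated.y_min,
        annotated.x_max - reference.x_max,
        annotated.y_max - reference.y_max,
    )
    if min(margins) < 0 or max(margins) > unacceptable_px:
        return "Unacceptable"
    if max(margins) <= acceptable_px:
        return "Acceptable"
    # More than 5 but at most 10 px: the example rule is silent, so escalate.
    return "Undefined: escalate"

object_outline = Box(100, 50, 200, 150)
print(grade_box(Box(97, 48, 203, 152), object_outline))   # Acceptable
print(grade_box(Box(103, 48, 203, 152), object_outline))  # Unacceptable (cuts in on the left)
print(grade_box(Box(93, 48, 203, 152), object_outline))   # Undefined: escalate (7 px out)
```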

Focus on the Descriptors

A rubric is a grid. Pay the most attention to the Performance Descriptors—the text blocks that describe each score level (e.g., “Excellent,” “Good,” “Poor”).

- Study the “Excellent” Column: This is the project’s goal. Memorize what perfect looks like for each criterion.

- Study the “Unacceptable” Column: These are the common pitfalls and errors. Train yourself to spot these in your own work before submission.

Annotate a Small Sample and Self-Score

Before tackling large batches, take 10-20 examples. Apply your labels, and then critique your own work using the rubric as if you were the QA lead.

| Your Annotation | Rubric Criterion | Your Self-Score | Key Takeaway |
|---|---|---|---|
| Car Bounding Box | Precision | Acceptable (3/5) | Need to be tighter; box is 7 pixels out. |
| Text Sentiment | Correctness | Excellent (5/5) | The phrase ‘not too bad’ is correctly classified as ‘Neutral.’ |
| Missing Object | Completeness | Needs Revision (1/5) | Forgot to label a partially occluded bike. Must re-read occlusion rules. |

This self-assessment builds the critical judgment that separates a fast annotator from a high-quality annotator.
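If you record your self-scores, a few lines of Python can point you to the criterion to revisit first. The scores and the 4.0 target below are hypothetical.

```python
from statistics import mean

# Hypothetical self-scores (1-5) from a practice sample, grouped by rubric criterion.
self_scores = {
    "Precision": [3, 4, 3, 5, 3],
    "Correctness": [5, 5, 4, 5, 5],
    "Completeness": [1, 4, 3, 2, 4],
}

def weakest_criteria(scores: dict[str, list[int]], target: float = 4.0) -> list[tuple[str, float]]:
    """Return criteria whose average self-score is below the target, worst first."""
    averages = {criterion: mean(values) for criterion, values in scores.items()}
    below = [(criterion, avg) for criterion, avg in averages.items() if avg < target]
    return sorted(below, key=lambda pair: pair[1])

for criterion, avg in weakest_criteria(self_scores):
    print(f"Review the rules for {criterion}: average {avg:.1f}/5")
# Review the rules for Completeness: average 2.8/5
# Review the rules for Precision: average 3.6/5
```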

Advanced Mastery: Becoming a Rubric Expert

For experienced professionals—freelancers seeking higher-paying, more complex projects or data scientists designing the QA workflow—mastering the rubric shifts from following rules to creating and refining them.

From Follower to Creator: Designing Analytic Rubrics

The most effective rubrics are typically analytic rubrics, which break quality down into multiple criteria, rather than holistic rubrics, which assign a single overall score. Creating one involves several key steps:

A. Align Criteria to Model Requirements

The rubric criteria must directly support what the downstream ML model needs to learn.

- Object Detection (Vision): Prioritize Precision (tight bounding boxes, accurate polygon edges) and Completeness (no missed objects).

- Named Entity Recognition (NLP): Prioritize Correctness (accurate entity classification) and Clarity (correct boundary span—not including trailing punctuation, for instance).

- Medical or Legal Data: High emphasis on Correctness and Consistency, often requiring subject matter expert (SME) validation.
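As an example of turning a Clarity rule into a check, here is a sketch that flags an NER span that starts or ends with whitespace, or ends with punctuation. The function name is hypothetical. A real rubric would also need exceptions, because some entities legitimately end in punctuation (e.g., “Acme Corp.”).

```python
import string

def span_boundary_issues(text: str, start: int, end: int) -> list[str]:
    """Return boundary problems for the entity span text[start:end] (end is exclusive)."""
    span = text[start:end]
    if not span:
        return ["empty span"]
    issues = []
    if span[0].isspace() or span[-1].isspace():
        issues.append("leading/trailing whitespace")
    if span[-1] in string.punctuation:
        issues.append("trailing punctuation")
    return issues

sentence = "She moved to Berlin, then to Paris."
start = sentence.find("Berlin")
print(span_boundary_issues(sentence, start, start + len("Berlin")))   # []
print(span_boundary_issues(sentence, start, start + len("Berlin,")))  # ['trailing punctuation']
```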

B. Define the Levels of Performance

Use clear, measurable, and actionable language for the performance levels. Avoid vague terms.

| Performance Level | Example Descriptor (for Polygon Precision) |
|---|---|
| Gold Standard (5) | The polygon follows the visible object perimeter with zero pixel deviation except where occlusion occurs. |
| Acceptable (3) | The polygon follows the perimeter but has a maximum of 2-pixel deviation or minor corner rounding. |
| Needs Re-Annotation (1) | The polygon cuts into the object or extends more than 3 pixels past the perimeter. |
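Encoding descriptors as a scoring function is a quick way to test whether they are truly measurable. This sketch assumes a QA tool reports the largest outward and inward deviation in pixels for the non-occluded edges. The function name and its inputs are hypothetical, and the sketch ignores “minor corner rounding,” which has no numeric threshold. Writing it out exposes two gaps in the example descriptors. First, an outward deviation above 2 but at most 3 pixels matches no level. Second, a small inward deviation could be read either as a “2-pixel deviation” or as “cuts into the object.” The sketch treats any inward deviation as level 1, which is an assumption.

```python
from typing import Optional

def polygon_precision_level(outward_px: float, inward_px: float) -> Optional[int]:
    """Map measured deviations to the example levels 5, 3 and 1, or None if no level applies.

    outward_px: largest distance (pixels) the polygon extends past the visible perimeter.
    inward_px:  largest distance (pixels) the polygon cuts into the object.
    Both are assumed to be measured on non-occluded edges only.
    """
    if inward_px > 0 or outward_px > 3:
        return 1     # "cuts into the object or extends more than 3 pixels past the perimeter"
    if outward_px == 0:
        return 5     # "zero pixel deviation"
    if outward_px <= 2:
        return 3     # "a maximum of 2-pixel deviation"
    return None      # more than 2 but at most 3 pixels: the example descriptors are silent

cases = [(0, 0), (1.5, 0), (2.5, 0), (4, 0), (0, 1)]
print([polygon_precision_level(out, inn) for out, inn in cases])  # [5, 3, None, 1, 1]
```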

C. Implement Adjudication and Weighting

In large-scale projects, not all errors are equal. The rubric must reflect this via a weighted scoring system.

- Critical Errors: Errors that could lead to model failure (e.g., Correctness errors, such as mislabeling a pedestrian as a traffic light) should carry a higher weight (e.g., a 3× multiplier).

- Minor Errors: Errors that are less likely to impact model performance (e.g., slight aesthetic imperfections in a bounding box) should carry a lower weight.
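Here is a minimal sketch of weighted scoring. It uses the 3× critical multiplier from the example and an assumed weight of 1 for minor errors. A single critical error outweighs two minor ones, even though a plain error count would rank the two batches the other way.

```python
# Assumed weights: the 3x critical multiplier from the example, 1x for minor errors.
ERROR_WEIGHTS = {"critical": 3, "minor": 1}

def weighted_penalty(errors: list[tuple[str, str]]) -> int:
    """Sum the weights of (description, severity) error records for one batch."""
    return sum(ERROR_WEIGHTS[severity] for _, severity in errors)

batch_a = [("pedestrian labeled as traffic light", "critical")]
batch_b = [("box 2 px too loose", "minor"), ("box 1 px too loose", "minor")]
print(len(batch_a), len(batch_b))                            # 1 2  (raw error counts)
print(weighted_penalty(batch_a), weighted_penalty(batch_b))  # 3 2  (weighted penalties)
```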

The rubric should also include an Adjudication Strategy to resolve conflicts when multiple annotators disagree on a label. This might involve a consensus vote or sending the data point to a designated Domain Expert for final “Gold Label” creation.
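One simple adjudication policy is sketched below. It assumes that a label needs a strict majority of votes to stand, and that anything without one goes to the domain expert’s queue. The function name and the vote data are hypothetical.

```python
from collections import Counter
from typing import Optional

def adjudicate(labels: list[str]) -> Optional[str]:
    """Return the label chosen by a strict majority of annotators, or None if there is none."""
    if not labels:
        return None
    top_label, top_count = Counter(labels).most_common(1)[0]
    # A strict majority (more than half the votes) can only belong to one label.
    return top_label if top_count * 2 > len(labels) else None

votes = {
    "img_001": ["Truck", "Truck", "Bus"],
    "img_002": ["Truck", "Bus"],
    "img_003": ["Truck", "Bus", "Van"],
}
decisions = {item: adjudicate(labels) for item, labels in votes.items()}
expert_queue = [item for item, label in decisions.items() if label is None]
print(decisions["img_001"], expert_queue)  # Truck ['img_002', 'img_003']
```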

Using Rubrics to Elevate Freelancer Proficiency

For a freelance data annotator, moving beyond simple task completion to true proficiency means higher pay, more complex work, and greater job security. The rubric is your secret weapon.

| Skill Development Area | How the Rubric Guides Improvement |
|---|---|
| Attention to Detail | Internalize the Precision Criteria. Instead of simply labeling, you are now performing a quality check on your own work against the high standard set in the rubric. This shift from labeler to QA specialist is invaluable. |
| Time Management | Identify Your Bottlenecks. When you self-score, note which criteria you struggle with and how much time you spend on them. If precision takes too long, practice with the geometry tools. If completeness is an issue, develop a systematic scanning pattern. |
| Critical Thinking | Master the Edge Cases. High-value tasks often revolve around ambiguity (e.g., is a partially obscured item visible enough to label?). The rubric forces you to think critically, applying specific rules to unique, complex scenarios. You move from “What is it?” to “How does the rule apply here?” |
| Communication | Clarity in Queries. When you encounter a truly ambiguous data point, your communication with the project manager should reference the rubric. Instead of “I’m confused,” you say: “On item #123, the object meets the visibility threshold for ‘Occluded,’ but the geometry violates the ‘Minimum Pixels’ rule. Should I prioritize the bounding box rules or the visibility rules?” This level of specificity marks you as a true professional. |

Advanced Rubric-Related Techniques for Pros

- “Gold Task” Creation: Professional QA annotators are often tasked with creating a set of Gold Standard tasks—data points that are perfectly labeled according to the rubric. These are later used to test and score other annotators. Mastering this means you fully understand the ultimate standard of quality.

- Error Analysis & Feedback: Beyond simply annotating, offer to perform error analysis on a team’s completed work. Use the rubric to categorize and quantify the frequency of errors. This service is a high-value skill that elevates you from an annotator to a Data Quality Analyst.

- Tool Mastery: Proficiency isn’t just knowing the rules; it’s using the annotation tool flawlessly to meet the geometric standards of the rubric. Can you snap a bounding box to a polygon, or use automated tracking while maintaining the required pixel precision?
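Scoring an annotator against Gold tasks can be as simple as the sketch below, which treats a missing submission as a miss. The task IDs, labels, and function name are hypothetical.

```python
def score_against_gold(submitted: dict[str, str], gold: dict[str, str]) -> tuple[float, list[str]]:
    """Return (share of gold tasks answered correctly, sorted IDs of missed tasks)."""
    if not gold:
        raise ValueError("the gold set is empty")
    # A task the annotator skipped (absent from `submitted`) counts as a miss.
    misses = sorted(task for task, label in gold.items() if submitted.get(task) != label)
    return 1 - len(misses) / len(gold), misses

gold = {"t1": "Positive", "t2": "Neutral", "t3": "Negative", "t4": "Neutral"}
submitted = {"t1": "Positive", "t2": "Positive", "t3": "Negative", "t4": "Neutral"}
print(score_against_gold(submitted, gold))  # (0.75, ['t2'])
```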

The Rubric as a Quality Assurance Tool

For project leads and data scientists, the rubric is the framework for a robust QA process. Its implementation is what protects the integrity of the training data.

Inter-Annotator Agreement (IAA) Scoring

IAA is a statistical measure of how consistently different annotators assign the same label to the same piece of data.

- Using the Rubric: When two annotators’ work on the same data point receives different rubric scores, the discrepancy immediately flags the item for review. A high IAA score across all criteria suggests that the rubric (and guidelines) are clear and the annotators are well-trained. A low score points to a flaw in the project design, such as ambiguous rules, or to gaps in annotator training.

- Kappa Score: For classification tasks, Cohen’s Kappa (two annotators) or Fleiss’ Kappa (more than two annotators) is often used. Both adjust raw agreement for the agreement expected by chance. The rubric serves as the qualitative guide to interpret why the Kappa score is low: is it a problem with Correctness or Completeness?
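For two annotators, Cohen’s Kappa is κ = (p_o − p_e) / (1 − p_e). Here, p_o is the observed agreement and p_e is the agreement expected by chance, based on each annotator’s own label frequencies. The from-scratch sketch below implements that formula on invented sentiment labels. In practice, scikit-learn’s `sklearn.metrics.cohen_kappa_score` computes the same statistic.

```python
from collections import Counter

def cohens_kappa(labels_a: list[str], labels_b: list[str]) -> float:
    """Cohen's kappa for two annotators who labeled the same items in the same order."""
    if len(labels_a) != len(labels_b) or not labels_a:
        raise ValueError("need two non-empty label lists of equal length")
    n = len(labels_a)
    # p_o: share of items where the two annotators chose the same label.
    p_o = sum(a == b for a, b in zip(labels_a, labels_b)) / n
    # p_e: chance agreement implied by each annotator's own label frequencies.
    counts_a, counts_b = Counter(labels_a), Counter(labels_b)
    p_e = sum(counts_a[c] * counts_b[c] for c in counts_a.keys() | counts_b.keys()) / (n * n)
    if p_e == 1:
        raise ValueError("kappa is undefined when both annotators use one identical label")
    return (p_o - p_e) / (1 - p_e)

sentiment_a = ["Pos", "Pos", "Neg", "Neu", "Pos", "Neg", "Neu", "Pos", "Neg", "Neu"]
sentiment_b = ["Pos", "Neu", "Neg", "Neu", "Pos", "Neg", "Pos", "Pos", "Neg", "Neu"]
print(f"{cohens_kappa(sentiment_a, sentiment_b):.3f}")  # 0.697
```

The two annotators agree on 80% of items, but chance alone would produce 34% agreement here. Kappa is therefore noticeably lower than the raw agreement.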

The Active Learning Feedback Loop

In modern AI workflows, annotation is not a one-time step but a continuous loop.

- Annotate: A batch of data is labeled.

- QA with Rubric: The rubric scores are used to identify high-error data points (failures in Correctness, Precision, etc.).

- Refine Guidelines: The frequent errors identified by the rubric are used to clarify ambiguous rules in the original guidelines.

- Model Training: Only the high-quality, rubric-validated data is used to train the model.

- Active Learning: The model is deployed to pre-label new data, and the items it is least confident about are prioritized for human review. The rubric is then used to QA the model’s automated annotations, ensuring the automated work meets the human-defined quality standard.
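The “only rubric-validated data” gate in this loop can be sketched as a filter that applies the same bar to human labels and to model pre-labels. The pass bar of 3 on every criterion and the item IDs are assumptions for illustration.

```python
# Hypothetical pass bar: every rubric criterion must score at least 3 out of 5.
PASS_BAR = 3

def rubric_validated(scores: dict[str, int], pass_bar: int = PASS_BAR) -> bool:
    """True if the item was scored and every criterion meets the pass bar."""
    return bool(scores) and all(score >= pass_bar for score in scores.values())

def split_batch(batch: dict[str, dict[str, int]]) -> tuple[list[str], list[str]]:
    """Split a QA'd batch into training-ready items and items sent back for rework."""
    train, rework = [], []
    for item_id, scores in batch.items():
        (train if rubric_validated(scores) else rework).append(item_id)
    return train, rework

# Human labels and model pre-labels go through the same gate.
batch = {
    "img_001": {"Correctness": 5, "Completeness": 4, "Precision": 4},
    "img_002": {"Correctness": 5, "Completeness": 2, "Precision": 4},
    "model_img_003": {"Correctness": 4, "Completeness": 4, "Precision": 3},
}
print(split_batch(batch))  # (['img_001', 'model_img_003'], ['img_002'])
```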

Final Thoughts

As AI models become more complex (e.g., multimodal, generative AI), the annotation tasks become increasingly subjective (e.g., ranking conversational quality, assessing ethical alignment). This shift makes the qualitative judgment enabled by a strong rubric more crucial than ever before.

The most successful data annotators and data teams will be those who view the rubric not as a punitive checklist, but as the scientific definition of data quality. Mastering its criteria, applying them consistently, and even participating in their creation is how you ensure that your contribution to the ML pipeline is foundational, reliable, and high-value.

What about your experience with rubrics? Comment and share your thoughts below!

🎓Want to practice on this and other topics? Check out our Data Annotation crash course!
